Distributed GitLab Runner Caching with MinIO

How to use MinIO as GitLab runner cache storage to help increase job concurrency

Continuous integration allows us to automate testing and deployments so that we can spend more time building and maintaining our sites. Recently we have spent some time optimising our pipelines, so that we can get new features built, tested and live with our clients as quickly as possible; all whilst ensuring no bugs make it into our production environment.

We operate 3 GitLab runners. However, because our pipelines require a cached build of the site, and because of a long-outstanding issue with the GitLab runner backend, we are only able to assign 1 runner to each project, with that runner's job concurrency set to 1.

This leaves our pipeline completion times bottlenecked. Typically, a Craft CMS site may take around 8 to 10 minutes to complete the full set of tasks shown below.

It's common for us to have 4 developers working on the same project at any one time. With each commit taking up to 10 minutes to go through the pipelines, queues begin to form...
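To put a rough number on that, here is a minimal sketch of the worst case, assuming every developer pushes at the same moment and each pipeline occupies one job slot for the whole run (a simplification, as real pipelines are split into stages). The function name is ours, purely for illustration.

```shell
#!/bin/sh
# Worst-case minutes until the last of several simultaneous pipelines finishes.
# Arguments: pipelines queued, minutes per pipeline, concurrent job slots.
worst_case_minutes() {
    queued=$1
    minutes=$2
    slots=$3
    # Round up: a partly filled batch still takes a full pipeline run.
    batches=$(( (queued + slots - 1) / slots ))
    echo $(( batches * minutes ))
}

worst_case_minutes 4 10 1   # 4 developers, 10 minutes each, 1 job slot
worst_case_minutes 4 10 4   # the same commits with 4 job slots
```

With a single slot the last developer waits 40 minutes for a result; with four slots everyone is done in 10.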

Enter MinIO

GitLab runners support storing build caches on S3 at Amazon Web Services (AWS). This can end up being expensive due to the number of requests made to AWS and the standard per-gigabyte monthly storage rate. Not to mention, we would be bottlenecked by the bandwidth of our internet connection.

MinIO is high-performance object storage, compatible with the Amazon S3 cloud storage service.

MinIO allows us to host an S3 API compatible storage server on our local network, meaning all of our runners can be converted to shared runners that cache the build centrally on the MinIO server, subsequently allowing any runner to download the cache and run a step in the pipeline.

Caveats

In the following example, we will not be exposing this MinIO server to the internet as an S3 replacement storage server. This guide only covers use with GitLab runners on the local network.

We'll be using Ubuntu 18.04.

Initial MinIO Configuration

Firstly, let's get the package lists up to date.

sudo apt-get update

Download the MinIO server binary.

curl -O https://dl.minio.io/server/minio/release/linux-amd64/minio

There is now a file called minio in your working directory. This needs to be made executable and then moved to /usr/local/bin, where it is expected.

sudo chmod +x minio

sudo mv minio /usr/local/bin

It's not advisable to run MinIO as the root user, so we will create an additional user to own the files and run MinIO.

sudo useradd -r minio -s /sbin/nologin

Next, change the ownership of the MinIO binary to the user minio.

sudo chown minio:minio /usr/local/bin/minio

We need somewhere to actually store the buckets. You can change this path, especially if you want to use a dedicated mounted drive for object storage.

sudo mkdir /usr/local/share/minio

Again, the user minio needs to own this.

sudo chown minio:minio /usr/local/share/minio

Create a folder to store the MinIO configuration files.

sudo mkdir /etc/minio

Once again, change ownership to the minio user.

sudo chown minio:minio /etc/minio

Create a configuration file. You will need to replace the values of MINIO_ACCESS_KEY and MINIO_SECRET_KEY with your own secure strings. It is also recommended to change the IP address in the example below to the IP your GitLab runners will be accessing. Setting it to 0.0.0.0 will expose MinIO on all interfaces.
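If you need a way to come up with those secure strings, the following is one possible sketch. It assumes /dev/urandom, tr and head are available (they are on a stock Ubuntu install). The function name and the lengths of 20 and 40 characters are our own choices for illustration, not MinIO requirements.

```shell
#!/bin/sh
# Print a random alphanumeric string of the requested length.
random_string() {
    length=$1
    # LC_ALL=C makes tr treat /dev/urandom as raw bytes rather than text.
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$length"
    echo
}

# Print two lines ready to paste into the configuration file.
echo "MINIO_ACCESS_KEY=\"$(random_string 20)\""
echo "MINIO_SECRET_KEY=\"$(random_string 40)\""
```

Keep a note of both values, as the GitLab runners will need them later.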

sudo nano /etc/default/minio

MINIO_VOLUMES="/usr/local/share/minio/"

MINIO_OPTS="-C /etc/minio --address 0.0.0.0:9000"

MINIO_ACCESS_KEY="EN0V4T3MINI0EX4MPL3" # Make sure you change this

MINIO_SECRET_KEY="uULZsuquRC4QAHuQQ7Ey22MF2NcbcM2EXAMPLE" # Make sure you change this

MINIO_REGION="eu-west-1"

Running MinIO as a Service

Download the MinIO service script.

curl -O https://raw.githubusercontent.com/minio/minio-service/master/linux-systemd/minio.service

Move that script into the systemd configuration directory.

sudo mv minio.service /etc/systemd/system

Reload systemd.

sudo systemctl daemon-reload

Lastly, we can enable MinIO to start on boot and start it up.

sudo systemctl enable minio --now

MinIO Web Interface

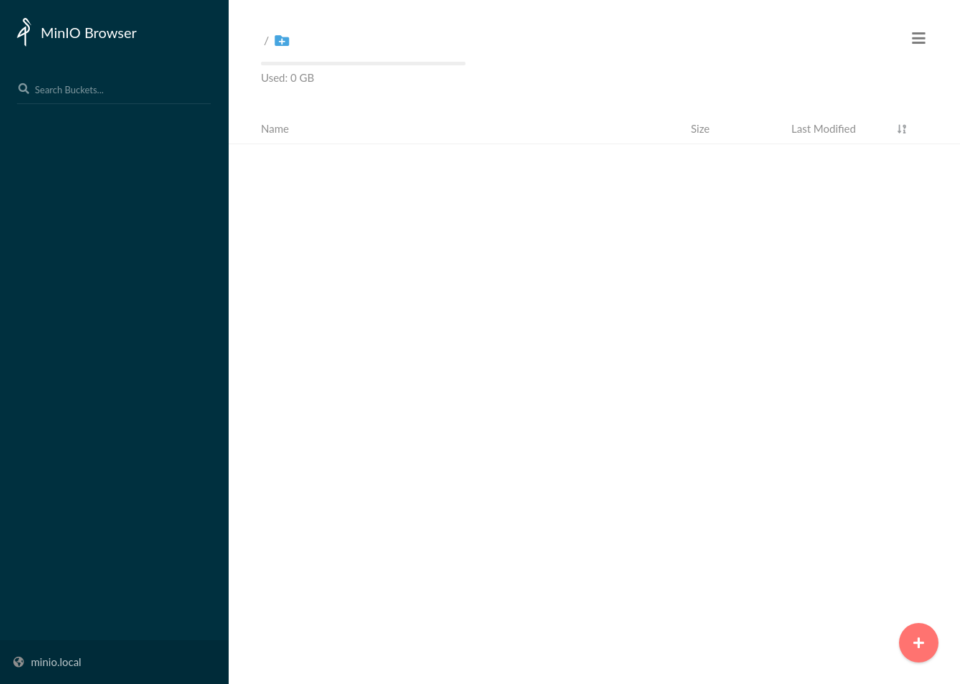

Now that's all done, you should be able to navigate to the MinIO web interface at http://your-minio-server-ip:9000

You can log in using the access key and secret key you specified in the MinIO configuration.

You should see a page similar to the following:

Click on the plus icon and create a bucket. I'd recommend calling it gitlab-runner. As with S3, all bucket names need to be unique, but as this is your own server, we're no longer fighting the rest of the world for unique names.

Once that is done, we are ready to tell the GitLab runners to use our MinIO server for caching.
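Before touching the runner configuration, it may be worth confirming that each runner host can actually reach the MinIO server. The sketch below assumes curl is installed on the runner and that your MinIO release exposes the /minio/health/live liveness endpoint. The function names are hypothetical helpers of our own.

```shell
#!/bin/sh
# Build the liveness URL for a MinIO server (plain HTTP, as used in this guide).
# Arguments: host, optional port (defaults to 9000).
minio_health_url() {
    echo "http://$1:${2:-9000}/minio/health/live"
}

# Return 0 if the server answers the liveness probe within 5 seconds.
minio_is_up() {
    curl --silent --fail --max-time 5 --output /dev/null \
        "$(minio_health_url "$@")"
}

# Example, run from a runner host (replace the placeholder with your IP):
# minio_is_up your-minio-server-ip && echo "MinIO reachable"
```

If the check fails, the address MinIO is bound to and any firewall between the runner and the server are the first things we would look at.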

GitLab Runner Configuration

The following isn't an instruction on how to set up a GitLab runner and will assume you already have a runner set up and working.

First off, let's change into the runner configuration directory and make a backup of the current configuration.

cd /etc/gitlab-runner/

cp config.toml config.toml.bak

Now open the configuration in a text editor. You should see something similar to the following.

sudo nano config.toml

concurrent = 1

check_interval = 0

[session_server]

session_timeout = 1800

[[runners]]

name = "Runner I"

url = "https://gitlab.your-company.co.uk/"

token = "your-gitlab-runner-enrolment-token"

executor = "docker"

environment = ["DOCKER_DRIVER=overlay2"]

[runners.custom_build_dir]

[runners.docker]

tls_verify = false

image = "ubuntu:18.04"

privileged = false

disable_entrypoint_overwrite = false

oom_kill_disable = false

disable_cache = false

volumes = ["/cache"]

shm_size = 0

cpus = "2"

Within the [[runners]] section, create a new section called [runners.cache]. Set the type to s3, and indicate this is a shared cache.

[[runners]]

...

[runners.docker]

...

[runners.cache]

Type = "s3"

Shared = true

Within [runners.cache] create another section called [runners.cache.s3] for the S3 configuration. The variables will all need configuring to match your setup.

[[runners]]

...

[runners.docker]

...

[runners.cache]

Type = "s3"

Shared = true

[runners.cache.s3]

ServerAddress = "your-minio-server-ip:9000" # Your MinIO server IP and port

AccessKey = "EN0V4T3MINI0EX4MPL3" # The access key you declared in the MinIO configuration

SecretKey = "uULZsuquRC4QAHuQQ7Ey22MF2NcbcM2EXAMPLE" # The secret key you declared in the MinIO configuration

BucketName = "gitlab-runner" # Name of the bucket you created

BucketLocation = "eu-west-1"

Insecure = true

Now, job concurrency can be increased. I'd recommend initially setting this to the number of threads available to the runner. However, you may decide to alter this either way depending on your use case. It's worth sitting and watching runner load to see if there's any headroom to increase concurrency.
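If you're not sure how many threads a runner has, a small sketch like the following could print a starting value. It assumes nproc (part of GNU coreutils) is available on the runner host, and the function name is just for illustration.

```shell
#!/bin/sh
# Suggest a starting value for "concurrent" from the host's thread count.
suggest_concurrent() {
    # nproc prints the number of processing units available to this process.
    echo "concurrent = $(nproc)"
}

suggest_concurrent
```

Treat the printed value as a starting point only, and adjust it after watching how the runner copes under load.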

concurrent = 2

A full configuration will look something like the following. You'll just need to repeat this for each of your runners. Once you're happy, save the file and watch your pipelines run in unison!

concurrent = 2

check_interval = 0

[session_server]

session_timeout = 1800

[[runners]]

name = "Runner I"

url = "https://gitlab.your-company.co.uk/"

token = "your-gitlab-runner-enrolment-token"

executor = "docker"

environment = ["DOCKER_DRIVER=overlay2"]

[runners.custom_build_dir]

[runners.docker]

tls_verify = false

image = "ubuntu:18.04"

privileged = false

disable_entrypoint_overwrite = false

oom_kill_disable = false

disable_cache = false

volumes = ["/cache"]

shm_size = 0

cpus = "2"

[runners.cache]

Type = "s3"

Shared = true

[runners.cache.s3]

ServerAddress = "your-minio-server-ip:9000"

AccessKey = "EN0V4T3MINI0EX4MPL3"

SecretKey = "uULZsuquRC4QAHuQQ7Ey22MF2NcbcM2EXAMPLE"

BucketName = "gitlab-runner"

BucketLocation = "eu-west-1"

Insecure = true

Summary

You may notice we haven't actually migrated any caches. If you do notice some failing jobs, it may be worth running a manual pipeline to ensure all stages are run. Telling GitLab to clear the runner caches could also help to alleviate this issue.
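If jobs keep failing on one runner in particular, a quick first check might be whether that runner's config.toml really contains the cache settings from this guide. The following is a rough sketch using grep. The function name is hypothetical, and it only checks that each setting is present, not that its value is correct.

```shell
#!/bin/sh
# Report whether a runner config contains the S3 cache settings from this guide.
# Prints nothing on success; names each missing setting and returns 1 otherwise.
check_cache_config() {
    config=$1
    status=0
    for pattern in '[runners.cache]' '[runners.cache.s3]' \
                   'ServerAddress' 'AccessKey' 'SecretKey' 'BucketName'; do
        # -F matches the pattern literally, so the brackets are not a regex.
        if ! grep -qF -- "$pattern" "$config"; then
            echo "missing: $pattern"
            status=1
        fi
    done
    return $status
}

# Example (the file may only be readable by root on your system):
# check_cache_config /etc/gitlab-runner/config.toml
```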

I hope this guide helps you integrate MinIO into your GitLab workflow, and subsequently speeds up your pipelines. We would love to know how you got on using this guide, so let us know below!
